Don’t Play It Safe: A CX Leader’s Guide to Evaluating AI Automation

In this opinion piece, Alhena.ai Founder and CEO Ashu Dubey explains how CX leaders can make better decisions when evaluating AI automation solutions.

Overly cautious configurations are quietly killing the value of automation. Here’s what you can do instead.

Over the last few months, I’ve had a front-row seat to how CX leaders are evaluating AI automation tools. Some are doing it right: pushing for scale, testing rigorously, defining clear metrics.

But too many are playing it safe.

They run cautious pilots with overly narrow scopes. They configure handoffs at the slightest ambiguity. And when it’s time to assess impact? They’re underwhelmed.

This post is a call to those leaders: If you’re going to invest in AI, don’t neuter it before it even starts.

Here’s what we’ve learned about how to evaluate CX automation solutions for actual impact and not just optics.

🧪 Start With Stress Tests, Not Demos

Forget the polished demo flow. Ask to test the system on your real-world queries, pulled from your actual support logs.

Test it on:

- Edge cases

- Unexpected phrasing

- Emotional tickets

- Multi-turn prompts

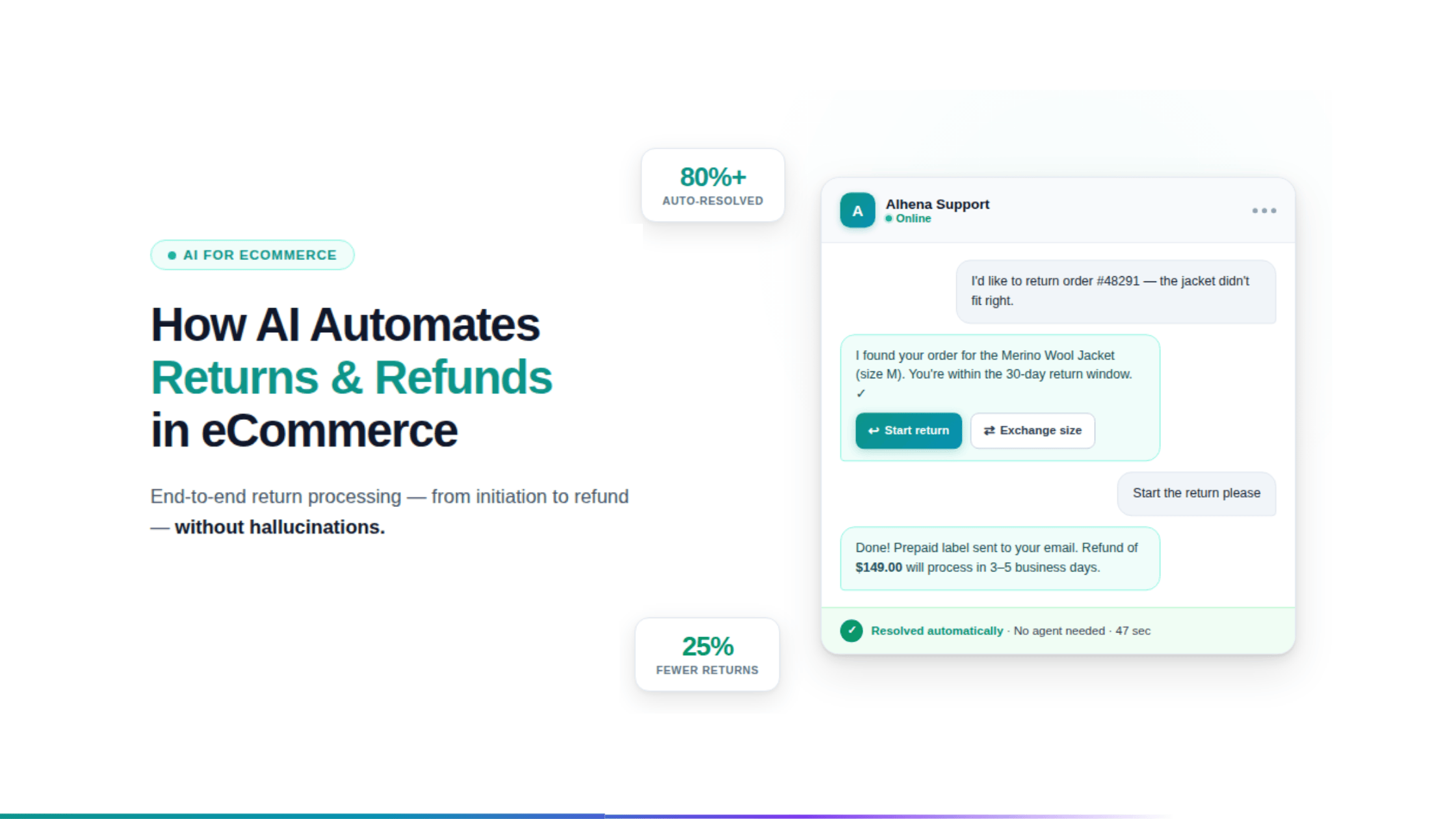

And measure not just “can it respond,” but can it resolve (without escalation, without hallucination, and with tone intact).

Ask vendors:

“What percentage of my historical volume can your AI handle end-to-end without human intervention?”

You’ll be surprised how few have a clear answer.
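One way to make that question concrete is to replay a sample of historical tickets through the system and score the outcomes yourself. The sketch below is a minimal illustration, not any vendor's API: the `ReplayResult` fields, the category labels, and the idea that a reviewer flags hallucination and tone are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class ReplayResult:
    """Outcome of replaying one historical ticket (hypothetical schema)."""
    ticket_id: str
    category: str       # e.g. "edge_case", "emotional", "multi_turn"
    escalated: bool     # the AI handed the ticket to a human
    hallucinated: bool  # a reviewer flagged an ungrounded answer
    tone_ok: bool       # a reviewer judged the tone acceptable
    resolved: bool      # the customer's issue was actually closed


def is_end_to_end(r: ReplayResult) -> bool:
    # "Resolve" here means: closed, no escalation, no hallucination, tone intact.
    return r.resolved and not r.escalated and not r.hallucinated and r.tone_ok


def resolution_rate_by_category(results):
    """Share of tickets resolved end-to-end, broken out per category."""
    totals, wins = {}, {}
    for r in results:
        totals[r.category] = totals.get(r.category, 0) + 1
        wins[r.category] = wins.get(r.category, 0) + int(is_end_to_end(r))
    return {category: wins[category] / totals[category] for category in totals}
```

Breaking the rate out per category matters because a strong overall number can hide weak performance on exactly the edge cases and emotional tickets you set out to test.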

🎯 Define What Success Means Before You Start

Containment alone isn’t enough. You need to know:

- What % of traffic do we expect to automate?

- What kind of tickets should always go to humans?

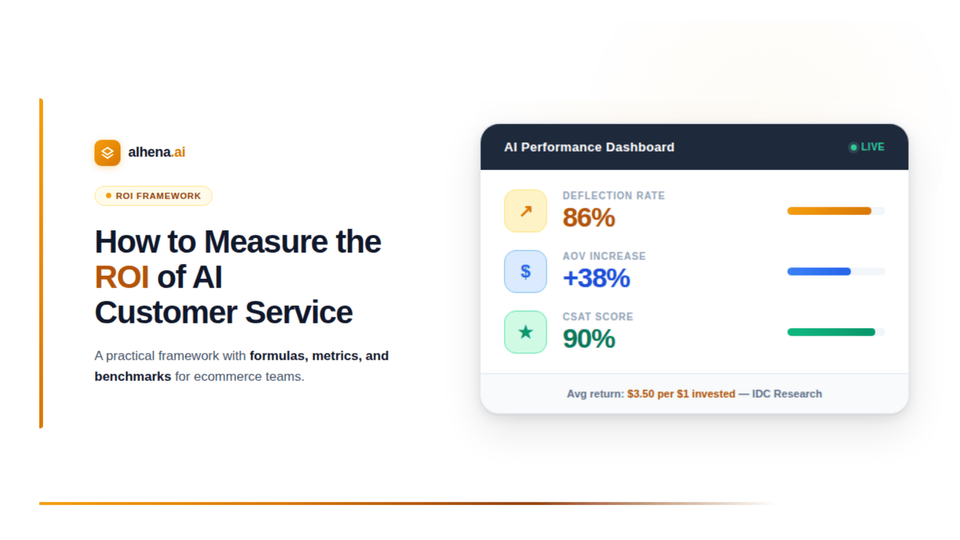

- What CSAT, resolution time, or cost savings would make this worthwhile?

- What business metrics do you want it to drive? In some cases, that can mean an increase in online sales via Shopping AI; in others, it may mean generating more leads for your sales team.

Without this clarity, your pilot will drift. And you’ll waste the most important months of adoption: the ones where your org is paying attention.
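It can help to write those answers down in a form you can check the pilot against later. Here is a minimal sketch, assuming you track an automation rate, CSAT, resolution time, and a list of ticket types reserved for humans. Every field name is hypothetical, and any thresholds you plug in are your own targets, not benchmarks.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SuccessCriteria:
    """Targets agreed before the pilot starts (hypothetical fields)."""
    min_automation_rate: float     # share of traffic resolved without a human
    min_csat: float                # on whatever CSAT scale you already use
    max_resolution_minutes: float
    always_human: frozenset        # ticket types that must go to humans


def evaluate_pilot(criteria: SuccessCriteria, observed: dict) -> dict:
    """Compare observed pilot numbers to the agreed targets, check by check."""
    automated_reserved = set(observed["automated_types"]) & criteria.always_human
    return {
        "automation_rate": observed["automation_rate"] >= criteria.min_automation_rate,
        "csat": observed["csat"] >= criteria.min_csat,
        "resolution_time": observed["resolution_minutes"] <= criteria.max_resolution_minutes,
        "human_only_respected": not automated_reserved,
    }
```

The point of the structure is less the code than the discipline: if you cannot fill in these fields before the pilot, you will not be able to judge it afterward.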

🤝 Push for Safe Automation, Not Safe Handoffs

There’s a difference between safe automation and safety-first handoff logic.

The first means:

- Well-grounded answers

- Smart fallbacks (“I’m not sure, here’s the best next step”)

- Clear escalation when needed, not by default

The second means:

- Offloading everything even slightly uncertain

- Overwhelming your agents with avoidable volume

- Failing to build trust in the AI layer

Safe automation is possible. But it requires intention, not fear.
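To illustrate the difference, here is a rough sketch of routing logic that escalates when needed rather than by default. It assumes the system exposes an intent label, a grounding check, and a confidence score; the intent names and both thresholds are made up for the example and would need tuning against your own data.

```python
from enum import Enum


class Action(Enum):
    ANSWER = "answer"      # respond with a grounded answer
    FALLBACK = "fallback"  # "I'm not sure, here's the best next step"
    ESCALATE = "escalate"  # hand off to a human agent


# Hypothetical ticket types you have decided should always go to humans.
ALWAYS_HUMAN = {"legal_threat", "chargeback"}


def route(intent: str, grounded: bool, confidence: float,
          answer_floor: float = 0.75, fallback_floor: float = 0.4) -> Action:
    """Pick an action; the floors are illustrative, not recommended values."""
    if intent in ALWAYS_HUMAN:
        return Action.ESCALATE
    if grounded and confidence >= answer_floor:
        return Action.ANSWER
    if confidence >= fallback_floor:
        return Action.FALLBACK
    return Action.ESCALATE
```

A safety-first handoff configuration is effectively the same function with `answer_floor` pushed so high that almost nothing qualifies as an answer, which is how avoidable volume ends up with your agents.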

🔌 Compatibility and Adoption Still Matter

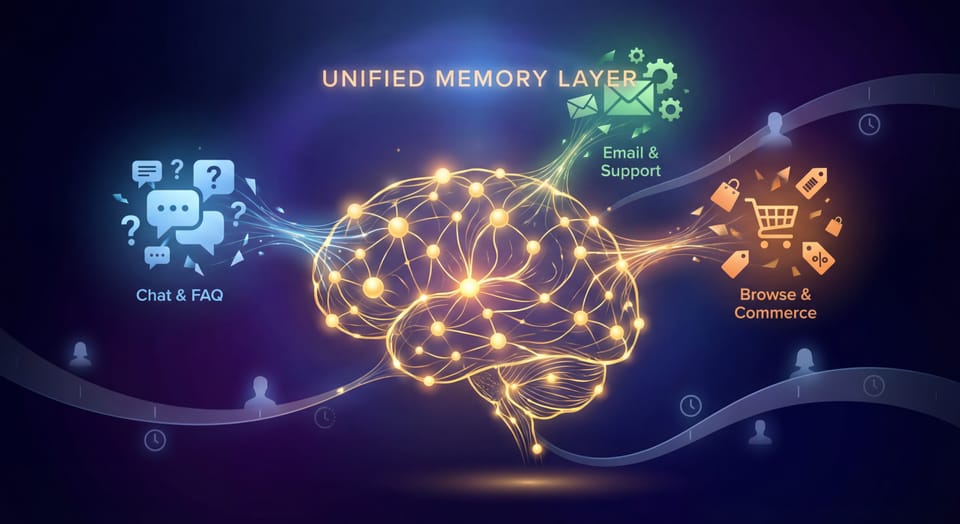

Even the smartest automation won’t deliver if:

- It doesn’t plug into your tech stack

- It takes weeks of training to go live

- Your team avoids using it because it’s too fragile

Look for solutions that make it easy to:

- Update responses without retraining models

- Plug into your stack (Shopify, Zendesk, etc.)

- Pull from your existing product and policy data

💬 Final Thought

You don’t buy AI for a demo. You buy it to create business impact, improve margins, reduce load, and serve better.

So run stress tests. Ask bold questions. Push your vendor for real automation and not just AI optics.

And above all, don’t play it safe. Play it smart.

👉 Want an AI automation solution that automates everything that can be automated, with a hallucination-free guarantee? Block some time to check it out here.