Top 5 Reasons AI Assistants Break in Production (and How to Fix Them)

Discover the top 5 reasons AI assistants break in production, from hallucinations to fragile workflows, and learn how to fix them with real-time data, contextual memory, and fault-tolerant orchestration. See how Alhena AI delivers accurate, reliable, and hallucination-free AI customer service.

AI assistants have quickly moved from “cool demo” to mission-critical engines powering eCommerce support, product discovery, and customer engagement. But as businesses scale, many teams discover the same frustrating truth: AI assistants that perform perfectly in staging often break in real-world production.

Whether it’s hallucinations, wrong product information, missing context, or outright crashes, these failures impact conversions, trust, and customer experience.

In this guide, we break down the top 5 reasons AI assistants fail in production, based on industry research, engineering case studies, and real eCommerce deployments, and how to fix them with a modern, reliable platform like Alhena AI.

#1: Outdated or Incomplete Knowledge Base

Most AI assistants rely on static FAQs, old CMS exports, or manually uploaded PDFs. Without continuous updates, even the most advanced models begin to:

- Cite outdated facts

- Provide inaccurate or misleading responses

- Confidently hallucinate missing details

- Generate “plausible” but wrong answers

This occurs because when an AI model lacks real data, it defaults to probabilistic text generation, a major cause of confabulation.

How to Fix It

To avoid knowledge drift:

- Use real-time data ingestion from catalog, CRM, inventory, and policy systems

- Continuously refresh data indexes

- Implement Retrieval-Augmented Generation (RAG)

- Ground the model in verified sources instead of relying solely on generated text

How Alhena Solves This

Alhena’s system continuously syncs your live catalogs, CMS pages, CRM data, and help-center content. This grounding ensures the AI generates accurate, current output instead of hallucinated instances or outdated references.

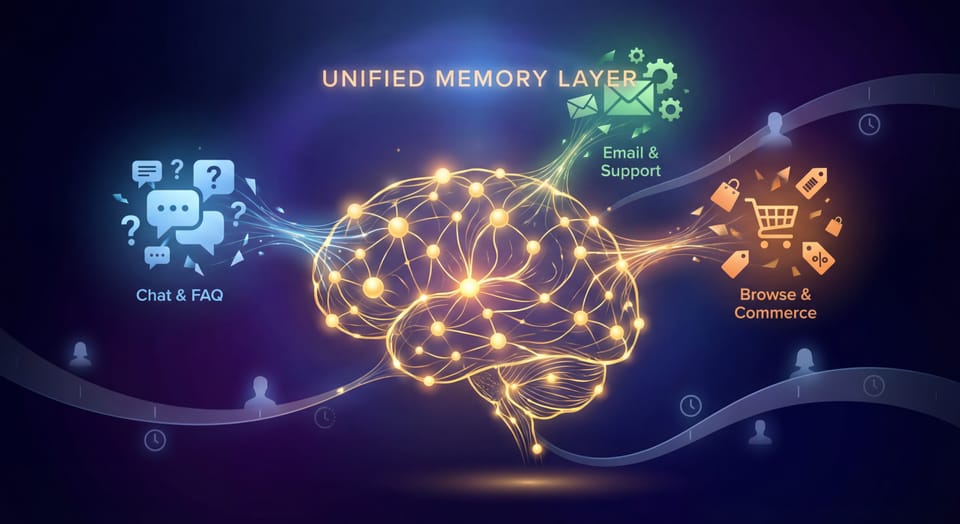

#2: Stateless Design: Missing Conversation Context

Many off-the-shelf chatbots are “stateless” they treat each user query as if it’s the first. This leads to:

- Repeated questions (“Didn’t I already tell you that?”)

- Disconnected responses

- Loss of personalization

- Broken multistep conversations

- Higher hallucination risk because missing context forces the model to guess

How to Fix It

Production-ready assistants must have:

- Multi-turn memory

- Session-level state retention

- Long-term preference storage

- Omnichannel continuity across chat, email, social, and voice

These enhancements reduce the model’s tendency to misinterpret inputs or generate misleading responses.

How Alhena Solves This

Alhena’s assistant maintains memory across every surface voice, chat, email, and social. This prevents context loss and avoids plausible-sounding but incorrect answers caused by missing context.

#3: Fragile Architecture & Poor Workflow State Management

Most assistant failures have nothing to do with the LLM, they happen because the surrounding system breaks.

Common failure points include:

- API timeouts

- Crashes in microservices

- Failed CRM or payment integrations

- Incomplete or stuck workflow states

- Missing retries and idempotency

- Lost conversation state during container restarts

When workflows fail, the AI often “fills the gap” with hallucinated next steps or incomplete instructions.

How to Fix It

AI assistants need:

- Durable, recoverable workflows

- Idempotent actions

- State checkpointing

- Fault-tolerant orchestration

- Verified data retrieval before generating responses

How Alhena Solves This

Alhena provides enterprise-grade orchestration, stable connectors, and resilient workflows so the AI never “guesses” due to broken backend systems. It retrieves verified data rather than inventing it.

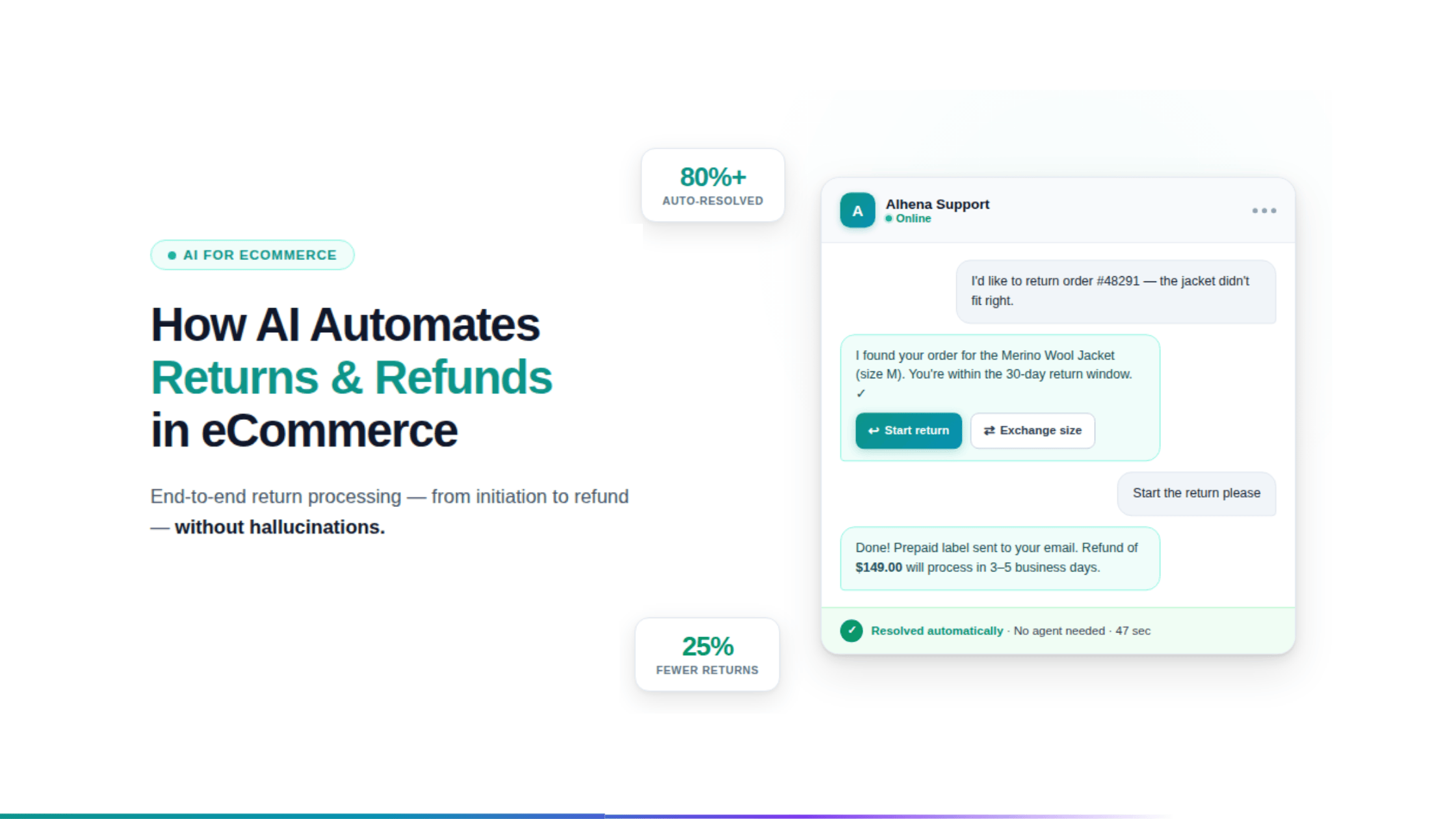

#4: Hallucinations & Inaccurate Responses

Hallucinations are the most researched generative AI failure mode. They occur when:

- Retrieval fails

- Prompts lack clarity

- The model overgeneralizes

- Bias or RLHF tuning distorts outputs

- The assistant must answer without enough grounding

This leads to:

- Confident but false answers

- Fabricated product specifications

- Incorrect troubleshooting steps

- Nonsensical or partially correct information

How to Fix It

Reduce hallucinations using:

- Proper RAG pipelines

- Verified knowledge sources

- Output-validation layers

- Watchdog detection models

- Rule-based fallback logic

How Alhena Solves This

Alhena is designed to be hallucination-resistant with:

- Watchdog systems that detect and block unverified output

- A retrieval-first architecture (retrieve before generate)

- Business-rule enforcement

- Strict grounding in real product and policy data

This ensures users only receive trusted, correct responses.

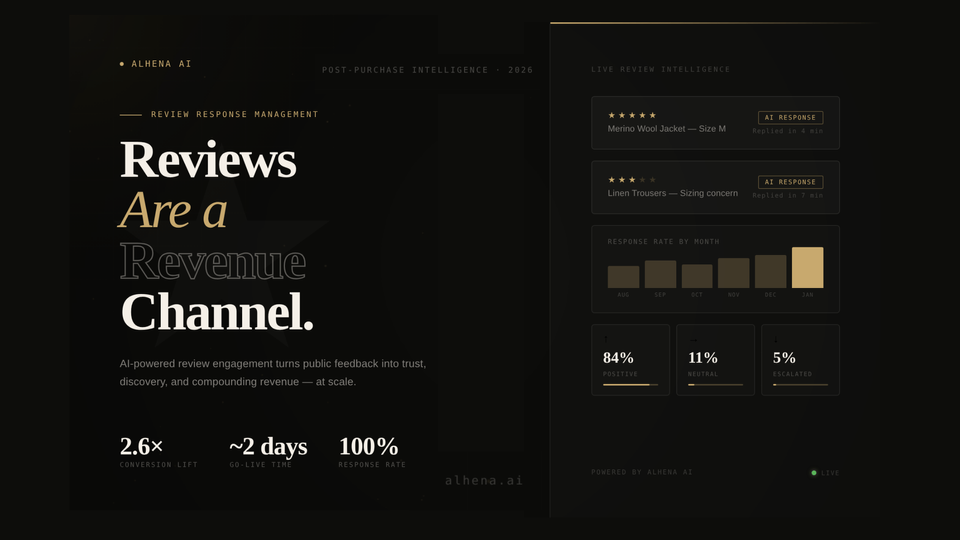

#5: Lack of Monitoring, Analytics & Continuous Improvement

Many assistants go live with no monitoring at all. This means teams cannot:

- Track accuracy

- Detect hallucinations

- Identify weak prompts

- Monitor RAG success rates

- Observe model drift

- Understand where users fail

By the time customers complain, the damage is done.

How to Fix It

You need:

- Hallucination-frequency tracking

- Query logs and error detection

- Sentiment analysis

- Prompt and workflow analytics

- Ongoing model evaluation

How Alhena Solves This

Alhena provides real-time dashboards that allow teams to track accuracy, detect anomalies, and refine workflows, closing the loop so the assistant remains reliable.

Conclusion: Build AI Assistants That Don’t Break

AI chatbot assistant failures often arise from these issues and can lead to misinformation, misleading responses, and reduced user trust. But with the right architecture, grounded retrieval, contextual memory, reliable workflows, and real-time analytics, your assistant can be accurate, stable, and trustworthy.

Alhena AI solves these challenges out of the box, delivering an AI system that retrieves instead of hallucinating, validates instead of guessing, and scales without breaking. Book a Demo and see it in action.

FAQ

What is an AI hallucination and why does it happen?

An AI hallucination occurs when a generative AI model produces confident but FALSE, misleading, or nonsensical output not grounded in real data. This phenomenon happens when the AI system lacks accurate retrieval sources, overgeneralizes from its training data, or attempts to generate text from probabilities rather than facts. Without grounding, the AI may hallucinate plausible-sounding responses that mislead users, a common type of confabulation in artificial intelligence.

How can RAG and grounding reduce hallucinated outputs?

Retrieval-Augmented Generation (RAG) feeds the AI verified knowledge before it generates a response. This ensures the model retrieves instead of invents information. Grounding in real-time content significantly improves accuracy and prevents the model from hallucinating missing information. Alhena AI uses strict RAG pipelines, ensuring answers remain fact-checked.

What kind of orchestration do AI assistants need in production?

Alhena AI provides durable workflow engines, reliable integration management, fault-tolerant pipelines, real-time retrieval layers, and safe fallback logic by default.

Why do AI chatbots hallucinate, and how can businesses prevent it?

AI chatbots hallucinate when the underlying LLM lacks enough data or context and starts guessing the most likely next words instead of providing factual information. This usually happens with outdated knowledge, missing retrieval, or unclear prompts. Businesses can prevent this by grounding the AI with RAG, using live and verified data sources, and applying hallucination-detection filters all of which platforms like Alhena AI provide out of the box to keep responses accurate and reliable.

How does Alhena AI ensure workflow reliability and fault tolerance?

Alhena AI ensures workflow reliability and fault tolerance by combining real-time error correction, resilient infrastructure, and intelligent orchestration. Its self-correcting AI agent automatically detects and fixes workflow issues, edge inference and caching keep responses fast even during spikes, and autoscaling with load forecasting prevents downtime. Alhena also supports smooth asynchronous handoffs between AI and human agents, ensuring conversations stay uninterrupted and stable across every channel.